The first-ever Eyeo Festival was last June, and the second iteration looks to be just as amazing as the first. Here’s a video of a presentation that I gave at Eyeo last year on using digital imagery to generate sound. I also have the HTML5 slideshow available (use the left and right arrow keys to navigate). A big thanks goes out to Dave Schroeder for creating Eyeo and sharing these videos.


Midnight Playground

Midnight Playground is an interactive, kinetic installation by Peng Wu, Jack Pavlik, John Keston, and Analaura Juarez. Peng initiated and directed the idea, Jack built the jump rope robot, and Analaura helped refine the concept and promote the piece. My role was to produce the music and track it to the still images that Peng had selected. I ended up making a one-hour video with thirty minutes of the image from the moon, followed by a four-second transition into another thirty minutes with an image of Mars. To produce the sound, I gave Peng a list of audio excerpts that had all been previously posted on AudioCookbook in One Synthesizer Sound Every Day. He picked the two that he thought would work best. I then went back to my original recordings and processed them specifically for the piece, adding some reverb and delay to enhance the spatial properties of the music. The piece will be on display in Gallery 148 at the Minneapolis College of Art and Design through January 29, 2012.

Chromatic Textures Shown at 6X6 #5: Mystery

On Wednesday, July 7, 2010, my piece Chromatic Textures was shown at 6X6 #5: Mystery, an exhibition at Ciné Lab in Athens, Georgia. My work was accepted along with work by five other artists, “…including Denton Crawford’s eyeballs, Aaron Oldenburg’s plunge into asphyxia, and a performance streamed live over the Internet from California.” Here’s my abstract for Chromatic Textures.

Chromatic Textures is a study on the synesthetic nature of our senses of sound and sight. Video input is used to produce generative musical phrases. The visual media is analyzed by the GMS (Gestural Music Sequencer) to create the musical forms in real time. The software includes adjustable probability distribution maps for the scale and rhythm. Adjusting these settings allows familiar structures to emerge. The settings chosen for this piece cause notes within a particular scale to play more frequently; however, it is still possible for any note within the twelve-tone chromatic system to occur. As a result, dissonant or blue notes can be heard on rare occasions throughout the piece.
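
The idea of a probability distribution map for scale and rhythm can be illustrated with a small sketch. This is not the GMS code and it leaves out the video analysis entirely; the weight values, the choice of a C major scale, the duration set, and the function names `next_note` and `generate_phrase` are all assumptions made for illustration. It only shows how weighting can favor scale tones while leaving every chromatic note possible.

```python
import random

# Hypothetical weights for the 12 pitch classes (C=0 ... B=11).
# C major scale tones get a high weight; the remaining notes keep a small,
# nonzero weight so chromatic "outside" notes can still occur occasionally.
SCALE_TONES = {0, 2, 4, 5, 7, 9, 11}
PITCH_WEIGHTS = [10.0 if pc in SCALE_TONES else 0.5 for pc in range(12)]

# Hypothetical weights for note durations, measured in beats.
RHYTHM_WEIGHTS = {0.25: 4.0, 0.5: 3.0, 1.0: 2.0, 2.0: 1.0}


def next_note(rng, octave=4):
    """Draw one (MIDI note number, duration in beats) pair from the maps."""
    pitch_class = rng.choices(range(12), weights=PITCH_WEIGHTS, k=1)[0]
    duration = rng.choices(
        list(RHYTHM_WEIGHTS), weights=list(RHYTHM_WEIGHTS.values()), k=1
    )[0]
    # MIDI convention: C4 is note 60, so octave 4 starts at 12 * 5.
    return 12 * (octave + 1) + pitch_class, duration


def generate_phrase(length, seed=None):
    """Generate a list of (note, duration) pairs; seed makes it repeatable."""
    rng = random.Random(seed)
    return [next_note(rng) for _ in range(length)]
```

Calling `generate_phrase(16)` returns sixteen note and duration pairs. Raising the weight of the non-scale pitch classes would make dissonant notes more common, and setting it to zero would remove them altogether.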


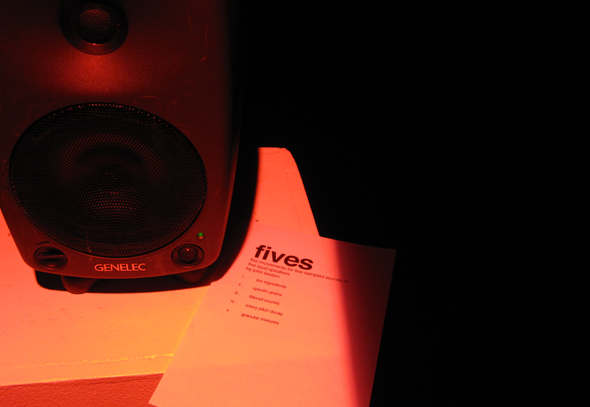

Five Movements for Five Sampled Sounds in Five Loud Speakers

On Tuesday, December 7, 2009 I presented a sound art installation titled Fives at the University of Minnesota. The subtitle of the work is Five Movements for Five Sampled Sounds in Five Loud Speakers. To produce the sound for the work, I developed an instrument designed to explore granular interpretations of digitized waveforms. The instrument was controlled over a wireless network with a multi-touch device. The sound objects generated were amplified through five distinct loud speakers arranged on pedestals at about chest height in a pentagonal configuration.
